How to track noindex reasons in large websites: A practical approach

The challenge: testing noindex rules on a Dev Environment

While working on a large website, I faced an issue: new noindex rules couldn’t be properly tested because the entire dev environment was set to noindex. This made it impossible to determine whether a specific page was noindexed due to a global setting or because of new business logic rules.

My solution? Add a unique ID or reason to the robots meta tag to make it clear why a page was indexed or excluded from search engines.

Use Case: A Recipe Website with Profile Pages

Let’s take the example of a recipe website that also has user profile pages. The indexing strategy follows specific business logic:

Indexing Rules:

- Recipe pages should always be indexed (reason: recipe).
- User profiles follow a tiered system:
  - Gold profiles should be indexed.
  - Silver and Bronze profiles should be noindexed.
- The entire dev environment should always be noindexed (reason: is-dev).
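The rules above can be sketched as a small function that returns both the robots directive and the reason, and then renders the meta tag with the reason attached. This is only an illustration: the function names, the `page_type` and `tier` parameters, and the use of a `data-reason` attribute here are my own assumptions, not a fixed API. It also assumes the business rules are evaluated first, so that on dev a page only reports `is-dev` when no business rule would have noindexed it. The rules themselves do not fix that order, but it is what lets you tell the two cases apart.

```python
from html import escape
from typing import Optional, Tuple

INDEX = "index, follow"
NOINDEX = "noindex, nofollow"


def robots_decision(
    page_type: str, tier: Optional[str] = None, is_dev: bool = False
) -> Tuple[str, str]:
    """Return (robots content, reason) for a page. Hypothetical helper."""
    if page_type == "recipe":
        content, reason = INDEX, "recipe"
    elif page_type == "profile":
        if tier not in ("gold", "silver", "bronze"):
            raise ValueError(f"unknown profile tier: {tier!r}")
        # Only gold profiles are indexed; silver and bronze are excluded.
        content = INDEX if tier == "gold" else NOINDEX
        reason = f"{tier}-profile"
    else:
        raise ValueError(f"unknown page type: {page_type!r}")

    # Assumption: the dev-wide noindex only takes over for pages that the
    # business rules would otherwise index, so a business reason stays visible.
    if is_dev and content == INDEX:
        return NOINDEX, "is-dev"
    return content, reason


def robots_meta_tag(content: str, reason: str) -> str:
    """Render the robots meta tag with the reason in a data attribute."""
    return (
        f'<meta name="robots" content="{escape(content)}" '
        f'data-reason="{escape(reason)}">'
    )
```

With this ordering, a silver profile on dev still reports `silver-profile`, while a recipe page on dev reports `is-dev`. If your setup needs the opposite precedence, move the `is_dev` check to the top of the function.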

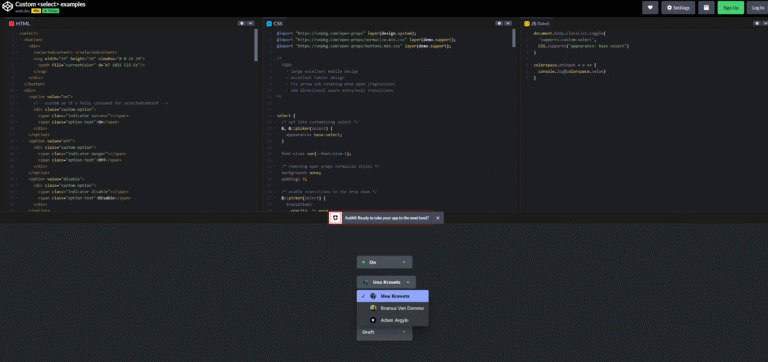

By adding a data attribute to the meta robots tag, we can track exactly why a page is noindexed:

<!-- Recipe page (indexed) -->
<meta name="robots" content="index, follow" data-reason="recipe">

<!-- Gold profile (indexed) -->
<meta name="robots" content="index, follow" data-reason="gold-profile">

<!-- Silver profile (noindexed) -->
<meta name="robots" content="noindex, nofollow" data-reason="silver-profile">

<!-- Bronze profile (noindexed) -->
<meta name="robots" content="noindex, nofollow" data-reason="bronze-profile">

<!-- Dev environment (noindexed) -->
<meta name="robots" content="noindex, nofollow" data-reason="is-dev">

Using Numeric Codes or JIRA Ticket IDs

If your website uses complex business rules, you might want to use numeric codes or JIRA ticket IDs instead of text descriptions. This helps with automated tracking, logging, and debugging.

Example:

<meta name="robots" content="noindex, nofollow" data-reason="JIRA-12345">

In this case, JIRA-12345 refers to a documented decision in your issue-tracking system, making it easy to understand why the noindex rule was applied.
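If you mix text reasons, numeric codes, and ticket IDs, it may help to check that every value follows one of the agreed formats before it ships. Here is a minimal sketch of such a check. The three patterns and the `classify_reason` name are assumptions for illustration. In particular, the ticket pattern only assumes the common `KEY-123` shape, so adjust it to whatever your tracker actually uses.

```python
import re

# Assumed formats (illustrative, not a standard):
_TICKET = re.compile(r"[A-Z][A-Z0-9]*-\d+")      # e.g. JIRA-12345
_NUMERIC = re.compile(r"\d+")                    # e.g. 1042
_SLUG = re.compile(r"[a-z0-9]+(?:-[a-z0-9]+)*")  # e.g. silver-profile


def classify_reason(value: str) -> str:
    """Classify a data-reason value as 'ticket', 'numeric', 'slug', or 'invalid'."""
    if _TICKET.fullmatch(value):
        return "ticket"
    # Check numeric before slug, because a plain number also matches the slug pattern.
    if _NUMERIC.fullmatch(value):
        return "numeric"
    if _SLUG.fullmatch(value):
        return "slug"
    return "invalid"
```

A check like this could run in a unit test or a crawl report, flagging any page whose reason comes back as `invalid`.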

Benefits of This Approach

✅ Easier Debugging – You can instantly see the reason a page is noindexed.

✅ Automated Auditing – Tools like Screaming Frog can extract the data-reason values (for example, with a custom extraction rule) for SEO reports.

✅ Scalability – Works well for large sites with many noindex rules.

✅ Collaboration – Developers, marketers, and SEOs all have clear visibility into indexation decisions.
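For the automated auditing point, you don't necessarily need a crawler to get started. As a rough sketch, the snippet below pulls the robots directive and its reason out of a page's HTML using only Python's standard-library `html.parser`. The class and function names are hypothetical, and fetching the HTML is left out on purpose.

```python
from html.parser import HTMLParser
from typing import List, Tuple


class RobotsReasonParser(HTMLParser):
    """Collect (content, data-reason) pairs from robots meta tags."""

    def __init__(self) -> None:
        super().__init__()
        self.found: List[Tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # html.parser lowercases attribute names; valueless attributes are None.
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            reason = attr.get("data-reason") or ""
            self.found.append((content, reason))


def extract_robots_reasons(html: str) -> List[Tuple[str, str]]:
    """Return every robots meta tag in the document as (content, reason)."""
    parser = RobotsReasonParser()
    parser.feed(html)
    parser.close()
    return parser.found
```

An empty reason in the result means the tag had no `data-reason` attribute, which is itself worth flagging in a report.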

Final Thoughts

This method provides a simple yet effective way to track noindex rules on large-scale websites. Adding a unique identifier to each robots meta tag makes debugging and SEO monitoring much more manageable.

If you’re struggling with testing noindex rules on a dev environment, try this approach. It could save you hours of frustration!